If you’ve been following the rapid evolution of AI language models, you’ve probably heard a lot about GPT4o, Claude Sonnet 3.5, and OpenAI’s other offerings. But a new name is quietly shaking up the ecosystem: Deepseek. Developed in China, Deepseek is a series of open-source large language models (LLMs) with two flagship models, published training details, and an MIT license, which means you can build commercial AI products without complicated licensing restrictions.

Below, we’ll explore how Deepseek stacks up against more established (but closed-source) alternatives, why its pricing can be a game-changer, and what sets it apart as possibly the first true open-source competitor in the LLM arena.

A New Standard for Open-Source AI

Deepseek is not just open-source in name—it fully discloses:

Details of the training data used

The techniques and methodologies employed

Licensing under MIT, making it simple to adapt into commercial solutions

That level of transparency and permissiveness in licensing is a big deal. While many “open-source” models tend to come with limitations or partial disclosures, Deepseek is arguably the first to check all those boxes simultaneously. As a result, developers and enterprises can rely on it with more freedom, lower overhead, and (crucially) the ability to iterate without fear of locked-down code.

Meet the Flagship Models

Deepseek currently showcases two main versions:

Deepseek-v3

Deepseek-r1

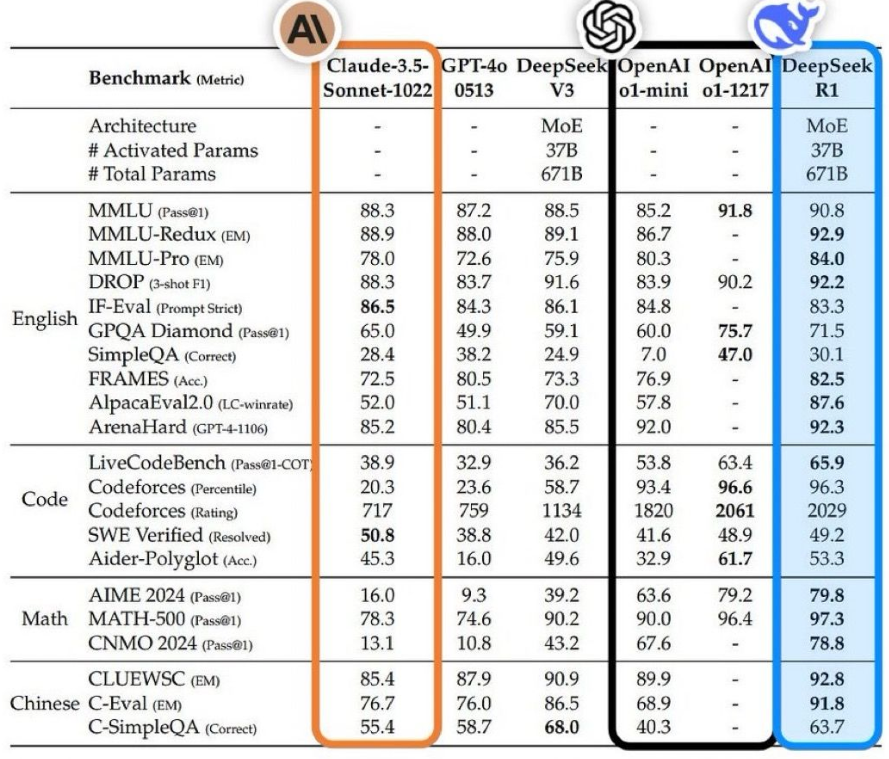

Deepseek v3 competes directly with GPT4o and Claude Sonnet 3.5 in language comprehension and general problem-solving, as measured by the MMLU benchmark. According to the published results, it outperforms both on MMLU while costing roughly a tenth as much through the API. That’s a bold claim in a space where cost can kill even a strong model’s viability.

Deepseek r1 is their specialized “reasoning” model. It aims to match and, in some cases, beat OpenAI’s o1 model, particularly on advanced logical tasks. It doesn’t surpass o1 across the board, but it does outshine o1-mini and occasionally edges out the full o1. The kicker? At list prices it’s more than twenty times cheaper than o1. That alone might turn many heads toward a more open and cost-effective solution.

Pricing Face-Off

OpenAI’s top-tier models are known for their capabilities but also for relatively high usage costs. Deepseek turns that dynamic on its head. Let’s look at the numbers:

Deepseek v3 API

Input: $0.27 / 1M tokens (cache miss), $0.07 / 1M tokens (cache hit)

Output: $1.10 / 1M tokens

GPT4o API

Input: $2.50 / 1M tokens

Output: $10.00 / 1M tokens

Claude Sonnet 3.5

Input: $3.00 / 1M tokens

Output: $15.00 / 1M tokens

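Deepseek v3 is drastically cheaper: roughly 9 times cheaper than GPT4o on both input and output, and 11 to 14 times cheaper than Claude Sonnet 3.5. And it doesn’t stop there. Against OpenAI’s reasoning models, Deepseek r1 undercuts by an even wider margin: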

Deepseek r1 API

Input: $0.55 / 1M tokens (cache miss), $0.14 / 1M tokens (cache hit)

Output: $2.19 / 1M tokens

OpenAI o1 API

Input: $15.00 / 1M tokens ( $7.50 / 1M if cached )

Output: $60.00 / 1M tokens

OpenAI o1 Mini API

Input: $3.00 / 1M tokens ( $1.50 / 1M if cached )

Output: $12.00 / 1M tokens

In black and white, the difference is stark: at these list prices, Deepseek r1 is roughly 27 times cheaper than OpenAI’s standard o1 on both input and output, and more than 5 times cheaper than o1 Mini. For startups and enterprise teams running huge volumes of tokens through LLMs, cost quickly becomes a critical factor.
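The price gaps in this section follow directly from the per-token rates listed above. Here is a minimal sketch of that arithmetic in Python. The dictionary keys are informal labels chosen for this example (not official API model IDs), the input rates are the cache-miss prices, and the monthly workload is purely hypothetical.

```python
# USD per 1M tokens, copied from the price lists above (cache-miss input rates).
# The keys are informal labels for this example, not official API model IDs.
PRICES = {
    "deepseek-v3": {"input": 0.27, "output": 1.10},
    "gpt4o": {"input": 2.50, "output": 10.00},
    "claude-sonnet-3.5": {"input": 3.00, "output": 15.00},
    "deepseek-r1": {"input": 0.55, "output": 2.19},
    "o1": {"input": 15.00, "output": 60.00},
    "o1-mini": {"input": 3.00, "output": 12.00},
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the list-price cost in USD for a given token volume."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


def price_ratio(expensive: str, cheap: str, input_tokens: int, output_tokens: int) -> float:
    """Return how many times more the `expensive` model costs for the same workload."""
    return cost_usd(expensive, input_tokens, output_tokens) / cost_usd(
        cheap, input_tokens, output_tokens
    )


if __name__ == "__main__":
    # Hypothetical monthly workload: 100M input tokens, 20M output tokens.
    workload = (100_000_000, 20_000_000)
    for expensive, cheap in [
        ("gpt4o", "deepseek-v3"),
        ("claude-sonnet-3.5", "deepseek-v3"),
        ("o1", "deepseek-r1"),
        ("o1-mini", "deepseek-r1"),
    ]:
        ratio = price_ratio(expensive, cheap, *workload)
        print(f"{expensive} vs {cheap}: {ratio:.1f}x")
```

The exact ratio depends on your mix of input and output tokens and on how often the cache is hit, so it is worth plugging in your own volumes rather than relying on a single headline multiplier.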

One Caveat: Best for Reasoning, Not for Coding

Sonnet 3.5 still holds the crown for coding tasks, and GPT4o is widely praised for logic and math. Deepseek doesn’t claim to be perfect in all areas. Deepseek r1 is excellent at many reasoning tasks and cheaper than the top-tier alternatives, but in some domains (especially code generation or advanced math) GPT4o or Claude Sonnet 3.5 may still pull ahead.

However, if you’re looking to cover general reasoning and complex language understanding at a fraction of the cost, Deepseek is in prime position.

Easy Access and Great UX

Unlike many open-source projects that can be difficult to test or adopt, Deepseek has made user-friendliness a priority:

Chat Portal: Head to chat.deepseek.com for a ChatGPT-like interface, so you can see how it handles your prompts without setting up any CLI tools.

Developer Platform: The API lives at platform.deepseek.com and is OpenAI-compatible, so existing OpenAI SDKs and client code need only minor changes. If you’ve worked with the GPT or Claude APIs, you’ll feel right at home.
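To give a feel for what OpenAI compatibility means in practice, here is a minimal sketch of a chat request using only the Python standard library. The base URL `https://api.deepseek.com`, the `/chat/completions` path, the model name `deepseek-chat`, and the `DEEPSEEK_API_KEY` environment variable are assumptions for this example and are not taken from this article, so check the platform documentation before relying on them. The request and response shapes follow the OpenAI chat completions convention.

```python
import json
import os
import urllib.request

# Assumptions for this sketch; verify both against the platform documentation.
BASE_URL = "https://api.deepseek.com"
MODEL = "deepseek-chat"


def build_chat_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first choice."""
    request = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # DEEPSEEK_API_KEY is a hypothetical variable name chosen for this example.
    key = os.environ.get("DEEPSEEK_API_KEY")
    if key:
        print(ask("Explain MMLU in one sentence.", key))
```

If you already use an OpenAI client library, the same idea usually comes down to pointing its base URL and API key at the Deepseek platform instead of writing the HTTP call by hand.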

Key Takeaways

Truly Open Source: Deepseek’s MIT license and transparent training details are a game-changer, with no hidden gotchas.

Impressive Benchmarks: Deepseek v3 competes with GPT4o, outperforms it on MMLU, and is roughly 10x cheaper. Deepseek r1 rivals OpenAI o1 while being more than 20x cheaper.

Low-Cost, High-Impact: For large-scale usage, an order-of-magnitude difference in pricing could redefine your AI budget.

Emerging Ecosystem: The sign of a healthy open-source project is how easy it is to adopt, and Deepseek nails that.

In short: Deepseek might be the first fully open-source LLM that can truly challenge bigger closed-source names like GPT4o, Claude Sonnet, and OpenAI’s o1. It’s not necessarily the best at everything, but its cost-to-performance ratio and open license could open up new frontiers in AI development—especially for teams eager to avoid black-box solutions and big monthly bills.

The Future of AI Looks Bright—And Cheaper

Deepseek’s rise highlights a trend in AI: the movement toward more transparent, open-source solutions with robust performance. While it doesn’t unseat every top-tier proprietary model in every domain, it proves that the open-source community can produce serious contenders—and do so at a compelling price point.

For developers, researchers, or businesses on a budget, Deepseek offers an intriguing opportunity to harness advanced language reasoning without paying the premium that typically comes with big-name, closed-source APIs. Given how quickly AI evolves, it’s anyone’s guess how soon we’ll see more open-source releases that push the envelope even further. For now, Deepseek’s story is a milestone worth following.
